- Member

- January 10, 2026

Foresight: The Transition from Natural Language to Natural Interaction

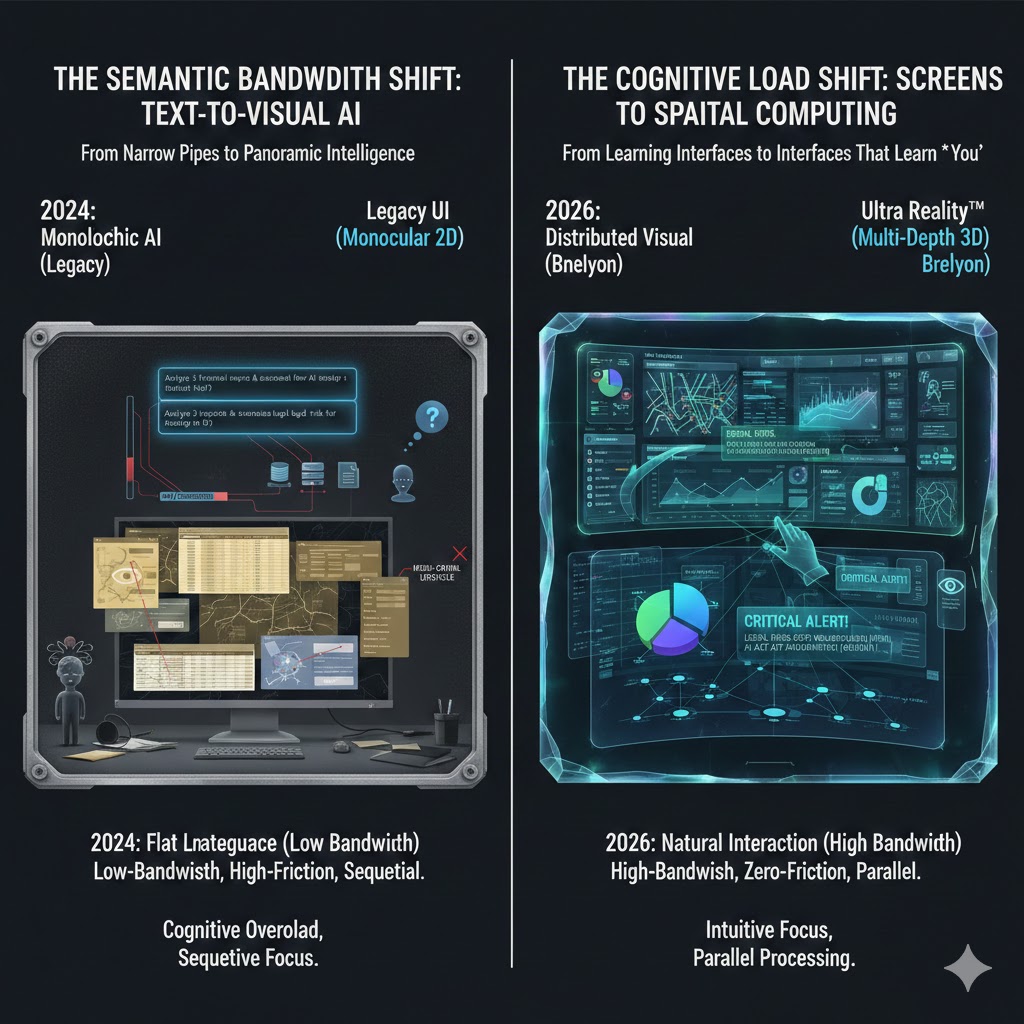

By early 2026, the artificial intelligence landscape has reached a "Post-Prompt" inflection point. The previous five years were defined by the mastery of Natural Language Processing (NLP), in which humans struggled to translate their intent into the specific syntax of text boxes; the current era is dominated by Natural Interaction (NI). This shift, spearheaded by pioneers like Brelyon, has transformed the computer screen from a flat, reactive display into an Observation Manifold. In this future, the AI does not wait for a typed command; it derives intent directly from the user's "Action Genome": the unique sequence of visual behaviors such as gaze duration, drag-and-drop friction, and pixel manipulation patterns.
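To make the idea of deriving intent from observed behavior more concrete, here is a minimal Python sketch. It is an illustration only, not Brelyon's method: the `InteractionEvent` type, the `infer_focus` function, and the one-second dwell threshold are all hypothetical names and values chosen for this example. It assumes intent can be approximated by the on-screen element that accumulates the most gaze time.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionEvent:
    """One observed behavior (hypothetical schema for illustration)."""
    kind: str          # e.g. "gaze" or "drag"
    target: str        # on-screen element the behavior was directed at
    duration_s: float  # how long the behavior lasted, in seconds


def infer_focus(events, min_dwell_s=1.0):
    """Return the element with the most total gaze time, or None.

    Only "gaze" events count; the threshold is an assumed cut-off
    below which no intent is inferred.
    """
    dwell = defaultdict(float)
    for event in events:
        if event.kind == "gaze":
            dwell[event.target] += event.duration_s
    if not dwell:
        return None
    target, total = max(dwell.items(), key=lambda pair: pair[1])
    return target if total >= min_dwell_s else None


events = [
    InteractionEvent("gaze", "chart", 0.8),
    InteractionEvent("gaze", "table", 0.4),
    InteractionEvent("drag", "chart", 1.5),
    InteractionEvent("gaze", "chart", 0.7),
]
focus = infer_focus(events)
print(focus)
```

A real system would presumably combine many more signals than gaze alone; the point here is only that a sequence of timestamped behaviors can stand in for a typed command.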

The Visual Engine as the New Operating System

The core of this foresight lies in the obsolescence of traditional API-led integration.

From 2D Consumption to Multi-Depth Participation

As we move into 2027, the physical modality of interaction evolves from "looking at" data to "participating in" it. Brelyon’s Ultra Reality™ displays introduce a Non-Euclidean Perception Buffer, which uses monocular depth to organize information into spatial layers.
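As a rough illustration of organizing information into spatial layers, the sketch below sorts items by a relevance score and spreads them across a fixed number of depth layers, nearest first. This is a toy model under stated assumptions, not a description of how the display works: `assign_layers`, the relevance scores, and the choice of three layers are all hypothetical.

```python
def assign_layers(items, num_layers=3):
    """Map (name, relevance) pairs to layer indices; layer 0 is nearest.

    Items are ranked by relevance and split into roughly equal groups,
    so the most relevant content lands on the closest layer.
    """
    ranked = sorted(items, key=lambda item: item[1], reverse=True)
    per_layer = -(-len(ranked) // num_layers)  # ceiling division
    return {name: index // per_layer
            for index, (name, _) in enumerate(ranked)}


panels = [
    ("logs", 0.3),
    ("alerts", 0.9),
    ("docs", 0.1),
    ("editor", 0.8),
    ("chat", 0.5),
]
layers = assign_layers(panels)
print(layers)
```

With five panels and three layers, the two most relevant panels share the nearest layer and the least relevant one sits alone on the farthest.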

The "Action Genome" and Autonomous Upskilling

The final stage of this transition is the emergence of Vision-to-Action models. These systems treat every user interaction as a "training token," progressively building a library of high-value professional behaviors. By 2026, companies no longer "train" employees on software; the software, through the Visual Engine, "coaches" the employee. As the engine observes an expert surgeon or data scientist, it compresses their workflow into a "Genome" that can then be used to provide real-time, context-aware overlays for novices. This achieves a state of Continuous Motion Automation, in which the interface itself becomes an adaptive coach, evolving its layout and assistance in real time to match the user's rising skill level.
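The compress-then-coach loop described above can be sketched in a few lines of Python. This is a deliberately simple stand-in, not an actual Vision-to-Action model: it assumes expert sessions arrive as lists of action names, and it "compresses" them into counts of which action follows which. The function names `build_genome` and `suggest_next` and the sample actions are hypothetical.

```python
from collections import Counter, defaultdict


def build_genome(sessions):
    """Compress expert sessions into next-action counts (a toy 'genome')."""
    genome = defaultdict(Counter)
    for actions in sessions:
        # Pair each action with the one that immediately follows it.
        for current, following in zip(actions, actions[1:]):
            genome[current][following] += 1
    return genome


def suggest_next(genome, last_action):
    """Return the experts' most frequent follow-up, or None if unseen."""
    followers = genome.get(last_action)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


expert_sessions = [
    ["open_scan", "zoom_region", "annotate", "save"],
    ["open_scan", "zoom_region", "measure", "annotate", "save"],
    ["open_scan", "zoom_region", "annotate", "export"],
]
genome = build_genome(expert_sessions)
hint = suggest_next(genome, "zoom_region")
print(hint)
```

An overlay for a novice who has just zoomed into a region could then surface the suggested follow-up step. Adapting to a rising skill level would need additional logic, such as suppressing hints the user already follows unprompted.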